Hybrid AI Models to Detect Disinformation

The CLAIM project addresses growing concerns about false and misleading claims in global information networks, using hybrid AI as a key ally. Such claims reflect the ever-growing number of online actors competing for attention and trying to shape public opinion. As a result, global information networks are at a critical juncture. In its 2024 survey, the International Panel on the Information Environment (IPIE) found that a majority of experts are concerned that Artificial Intelligence (AI) perpetuates biases.

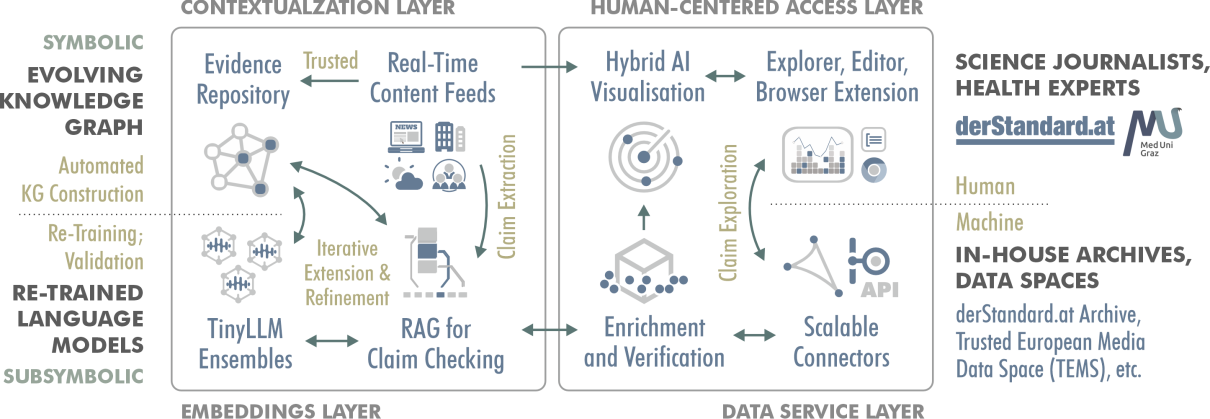

CLAIM will address this challenge by developing hybrid AI models that combine symbolic and subsymbolic components. In doing so, the human-centered project aims to combat the twin problems of online claims: misinformation (the inadvertent spread of false information) and disinformation (the deliberate spread of false information in order to mislead).

Hybrid AI Use Cases – Science Journalism and Mental Health

The project will involve end-users in two use cases: science journalism and stigma around mental health disorders. The chosen use cases are particularly affected by false claims. CLAIM will streamline claim-checking workflows and help with complex and often contested issues. For this purpose, it will provide tools for journalists, mental health researchers, educators, and content creators to counter claims that lead to stigmatization and false beliefs.

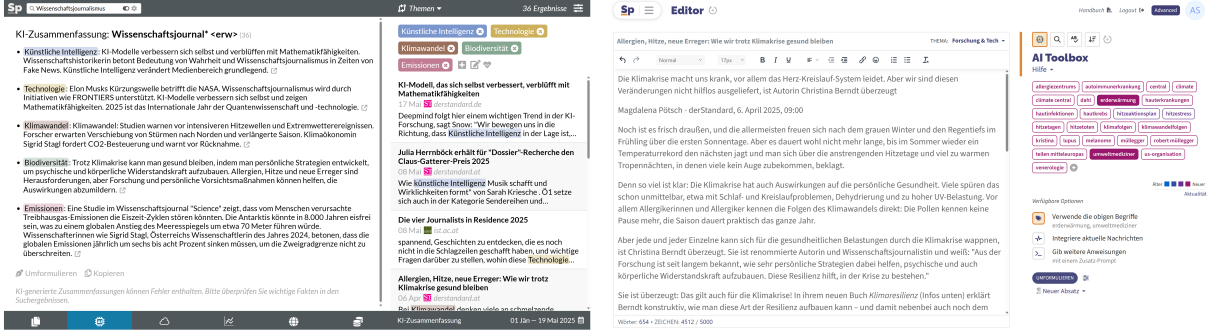

webLyzard Dashboard with AI Summaries of science journalism coverage (left). Storypact AI Editor with derStandard climate crisis article (right; in German).

Visualizations will help these users track the origins and propagation of false claims and identify trusted sources with verified information that refutes these claims. CLAIM will integrate these visualizations into three Web applications that support all phases of the content production workflow:

- Visual Claim Explorer to track evolving claims and their context,

- Text Editor to author articles with hybrid AI using trusted sources, and

- Browser Extension to test claims in real time.

Hybrid AI Models to Combat False Claims

To be useful for claim verification, AI models must be truthful and trustworthy. While Large Language Models (LLMs) have demonstrated strong capabilities in handling the subtle nuances of human language, they remain prone to so-called hallucinations, that is, fluent output that is not grounded in fact. Common LLMs lack an objective sense of what is useful, real, or true. CLAIM’s seamless integration of symbolic and subsymbolic components will address this shortcoming.

For this purpose, the team will first train models on content from trusted sources to improve factual accuracy and reduce hallucinations. Next, they will implement evolving knowledge graphs, which, in turn, provide the models with common sense and domain knowledge. Additionally, they will use Retrieval Augmented Generation (RAG) to integrate recent and trusted information. For the subsymbolic embeddings layer, CLAIM will use knowledge distillation techniques to train smaller language models for specific tasks. This will reduce their computational requirements and energy footprint.
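The retrieval step of RAG can be illustrated with a minimal sketch. It is not CLAIM's implementation: it assumes a simple bag-of-words cosine similarity in place of learned embeddings, and the passages, source labels, and function names (`retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(claim, passages, k=2):
    """Return up to k trusted passages most similar to the claim."""
    query = Counter(tokenize(claim))
    scored = [(cosine(query, Counter(tokenize(p["text"]))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop passages that share no vocabulary with the claim.
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(claim, evidence):
    """Assemble a verification prompt that cites the retrieved passages."""
    lines = [f"[{p['source']}] {p['text']}" for p in evidence]
    return (
        "Assess the claim using only the evidence below.\n"
        + "\n".join(lines)
        + f"\nClaim: {claim}"
    )


# Hypothetical trusted passages, invented for illustration only.
TRUSTED = [
    {"source": "source-a", "text": "Vaccines are tested in clinical trials before approval."},
    {"source": "source-b", "text": "Depression is a treatable medical condition."},
    {"source": "source-c", "text": "Sea level rise is driven by warming oceans and melting ice."},
]

claim = "Depression is not a real medical condition."
evidence = retrieve(claim, TRUSTED, k=1)
prompt = build_prompt(claim, evidence)
```

The resulting `prompt` would then be passed to a language model, so that its assessment is grounded in the retrieved passages rather than in its training data alone. A production system would likely replace the word-overlap scoring with dense embeddings and a vector index.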

CLAIM’s hybrid AI models will be explainable and cross-lingual. They will help detect, classify, and verify claims. These features will be available as data services to facilitate their integration with external data spaces and applications. Classifying claims by IPTC NewsCodes and the Sustainable Development Goals (SDGs) will highlight interdependencies and enable analysts to estimate the impact of a misleading claim across SDGs.
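One way to surface such interdependencies is to count how often two SDGs are assigned to the same claim. The sketch below assumes each claim has already been classified; the `ClassifiedClaim` record, the topic labels (placeholders, not real IPTC NewsCodes), and the sample claims are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from itertools import combinations


@dataclass
class ClassifiedClaim:
    text: str
    topic: str                      # placeholder label, not a real IPTC NewsCode
    sdgs: frozenset = frozenset()   # SDG numbers in the range 1-17


def sdg_cooccurrence(claims):
    """Count how often each pair of SDGs is assigned to the same claim."""
    counts = Counter()
    for claim in claims:
        # Sort so that each pair is always stored as (lower, higher).
        for pair in combinations(sorted(claim.sdgs), 2):
            counts[pair] += 1
    return counts


# Hypothetical classified claims, invented for illustration only.
CLAIMS = [
    ClassifiedClaim("hypothetical claim A", "topic-health", frozenset({3, 4})),
    ClassifiedClaim("hypothetical claim B", "topic-environment", frozenset({3, 13})),
    ClassifiedClaim("hypothetical claim C", "topic-health", frozenset({3, 4, 10})),
]

links = sdg_cooccurrence(CLAIMS)
strongest_pair, strongest_count = links.most_common(1)[0]
```

In this toy data set, SDG 3 and SDG 4 co-occur most often, which would suggest that a misleading health claim may also affect education-related goals. Real impact estimates would need weighting by reach and severity, which this sketch leaves out.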

Connecting Technology, Journalism and Healthcare

The composition of the consortium reflects the project’s human-centered and interdisciplinary nature. Complementary skills will help build hybrid AI models that reveal the origins of false and misleading claims. Together, the project partners will uncover how claims spread while also identifying ways journalists and content creators can mitigate their impact.

webLyzard technology has been working at the intersection of AI, sustainability, and climate change communication for over 15 years. It will lead the project’s technical development, collaborating with the deep tech startup Storypact to provide content authors with Generative AI (GenAI) features. Two use case partners will ensure transdisciplinary excellence. Der Standard is a major Austrian news medium known for in-depth research and accurate coverage of complex issues. The Medical University of Graz is the scientific partner leading the work on health literacy and destigmatization strategies.

CLAIM Project Overview

- Project Start: 01 May 2025 (until April 2028).

- Coordinator: webLyzard technology; Project Partners: Medical University of Graz, Der Standard, Storypact.

- Funding: CLAIM is supported by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility and Technology (BMK) through the AI for Tech & AI for Green Programme – FFG No FO999923561.